AI has become scarily good at sounding right. Ask ChatGPT, Claude, or Gemini a complex question, and you'll get an answer with examples, analogies, and a confident, balanced tone. It feels authoritative. Complete. Final.

And that's exactly the problem! It's designed to make you feel satisfied, not informed.

Here’s the hidden truth: AI answers systematically skip over the edge cases, the contradictions, the deeper “what-ifs.” This isn't an accident; it's a direct result of how these models are trained.

Most AI chat models are trained with something called Reinforcement Learning from Human Feedback (RLHF), which is basically a giant popularity contest for AI answers. Human raters compare answers and pick the ones they like best, and the AI learns to produce answers that look like the winners.

Humans tend to prefer confidence over honesty. One study found that when an AI admits "I'm not sure," users rate it poorly, even when that uncertainty is the most accurate response.

So, the AI learns a simple lesson:

Always sound confident. Wrap everything up neatly. Give a feeling of closure.

For simple homework or trivia, that's fine. But when you need the whole truth for deep research, this helpfulness becomes a hidden trap.

How to See What's Missing

So how do you fight an invisible problem?

This is why we built Critique in Mnemosphere. The process is deceptively simple:

- Ask your question and get a response from any AI model.

- Read the answer to get your baseline understanding.

- Then, simply click the ‘Critique Response’ icon.

For even better results, you can ask one AI for the answer (like GPT-5) and a different AI (like Claude Sonnet 4.5) to critique it. It's like getting a second opinion that helps you escape the first model's built-in biases.

Let's see the profound difference Critique makes with a real example.

The Prompt: "What is the best productivity method?"

We asked ChatGPT, and it gave the classic, perfect-sounding answer. It's helpful, well-structured, and covers the famous frameworks:

Summary of ChatGPT's Answer:

It feels comprehensive. It's actionable. You'd normally stop here, feeling fully informed and ready to try one of these methods.

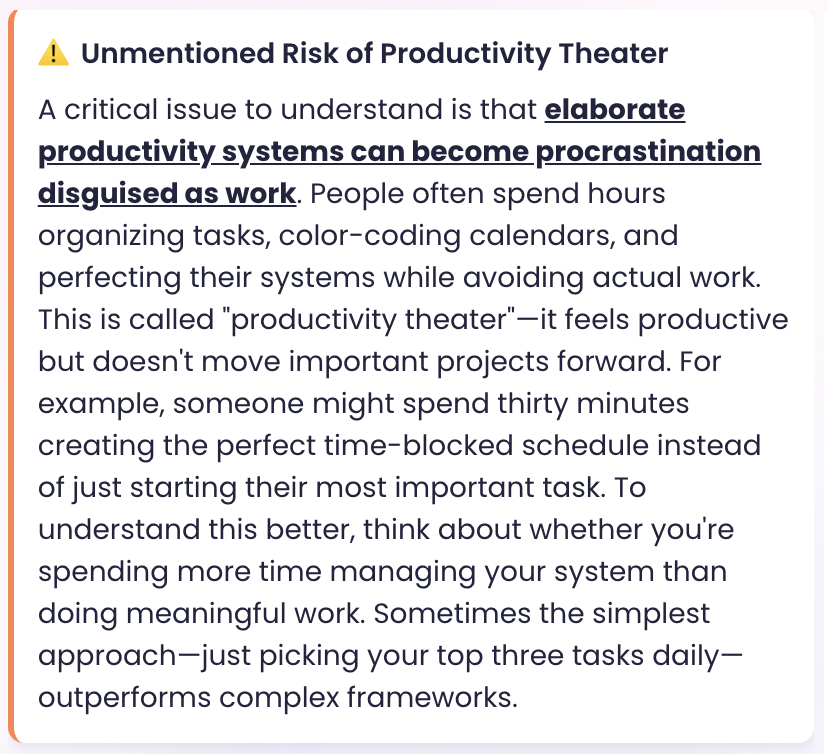

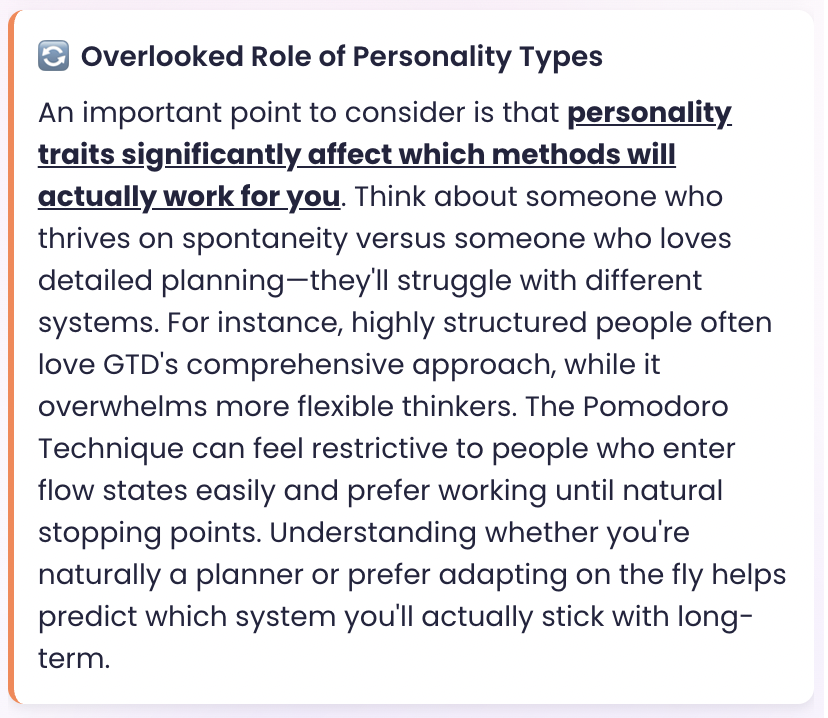

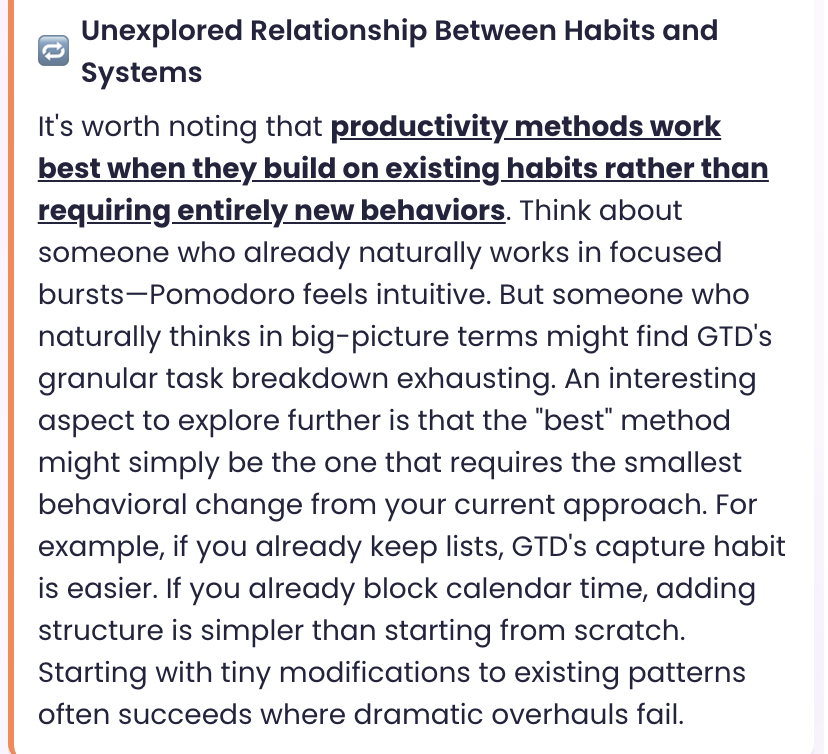

But when you click ‘Critique’, you see what the AI left out.

See what just happened?

With one click, you went from a superficial, "balanced" view to a deep, nuanced understanding.

You started with a simple list of tactics. You ended with a smarter way to think about your own productivity, one that considers your personality, energy levels, work environment, and what "effective" work really means for you.

Stop Building Your Knowledge on Quicksand

When an AI answer sounds complete, your brain stops questioning. That’s how you build false confidence.

Don't settle for the illusion of completeness. Use Critique to see the full picture.

Try Mnemosphere and discover what every AI answer isn't telling you.